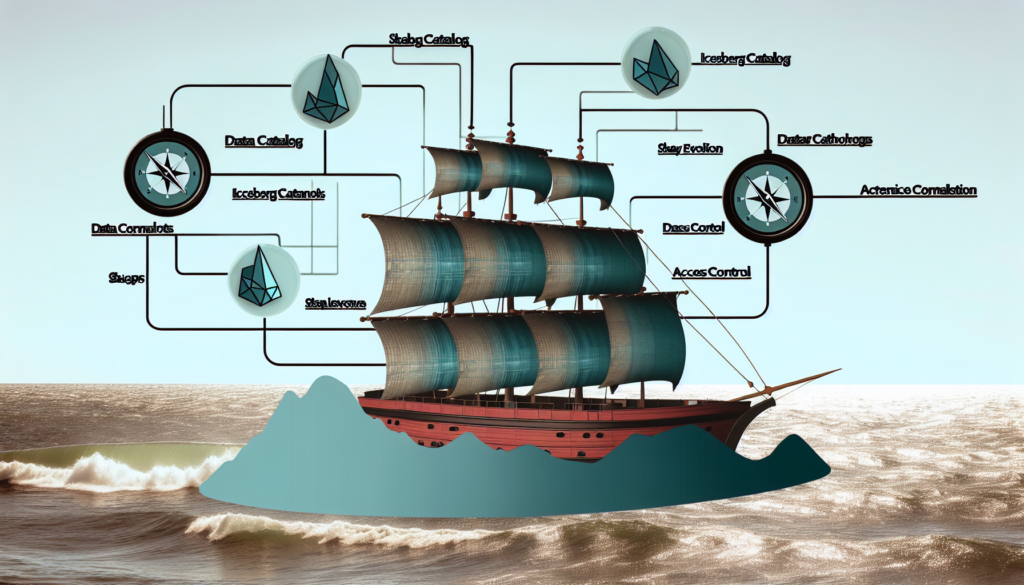

Navigating the Evolution of Apache Iceberg and Data Lakehouse Architecture 2024

Exploring the Depths of Iceberg Catalog Management in Modern Data Ecosystems

Iceberg catalog management has become a central concern in modern data ecosystems, including those of Los Angeles. With the rise of data lakehouse architecture, organizations are seeking more efficient ways to manage large data repositories. Apache Iceberg, an open table format, has emerged as a prominent option in this landscape.

The Dremio Blog highlights the importance of effective lakehouse management, likening Dremio’s integrated Nessie-based catalog system to ‘Git for Data’. This innovative approach allows for visualization of branch histories and robust dataset version management, addressing common challenges in the field. “[Dremio’s] management features […] empower organizations to harness their data for strategic advantage.”
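To make the ‘Git for Data’ idea more concrete, here is a toy sketch in plain Python. It is not the Nessie or Dremio API; the class name `VersionedCatalog`, its methods, and the metadata file names are all hypothetical. It only illustrates the general idea that a branch is a named pointer to a commit, and each commit records which metadata file each table points to.

```python
class VersionedCatalog:
    """Toy model of a branch-aware catalog (illustrative only, not Nessie's API)."""

    def __init__(self):
        # Commit 0 is an empty root commit with no tables.
        self.commits = {0: {"parent": None, "tables": {}}}
        self.branches = {"main": 0}
        self._next_id = 1

    def create_branch(self, name, from_branch="main"):
        # A new branch starts at the same commit as its source branch.
        self.branches[name] = self.branches[from_branch]

    def commit(self, branch, table, metadata_location):
        # Each commit copies the parent's table pointers and updates one of them.
        parent = self.branches[branch]
        tables = dict(self.commits[parent]["tables"])
        tables[table] = metadata_location
        commit_id = self._next_id
        self._next_id += 1
        self.commits[commit_id] = {"parent": parent, "tables": tables}
        self.branches[branch] = commit_id
        return commit_id

    def log(self, branch):
        # Walk parent links from the branch head back to the root.
        commit_id = self.branches[branch]
        history = []
        while commit_id is not None:
            history.append(commit_id)
            commit_id = self.commits[commit_id]["parent"]
        return history

    def table_location(self, branch, table):
        return self.commits[self.branches[branch]]["tables"][table]

    def fast_forward(self, target, source):
        # Only allowed when the target head is an ancestor of the source head.
        if self.branches[target] not in self.log(source):
            raise ValueError("target branch has diverged from source")
        self.branches[target] = self.branches[source]
```

In this sketch, work committed on a side branch stays invisible to `main` until `fast_forward("main", "etl")` moves the `main` pointer, which is the isolation property the Git analogy is pointing at.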

As we continue to explore the depths of Iceberg Catalog Management, it’s clear that the tools and methodologies we adopt today will define the data-driven decision-making of tomorrow.

Enhancing Performance and Scalability with Apache Iceberg Catalogs in Lakehouse Architectures

The performance and scalability of data management systems are pivotal for businesses that rely on data analytics. Apache Iceberg addresses both with a three-layer architecture: a catalog that points to each table's current metadata, a metadata layer (metadata files, manifest lists, and manifests), and the data files themselves. An article on Medium via Towards Data Science places Iceberg alongside its peers: “Apart from Apache Iceberg, other currently popular open table formats are Hudi and Delta Lake.”

This layered design improves performance because a query engine can consult the metadata to skip data files that cannot match a query, instead of listing and scanning whole directories. It also scales with the growing data needs of organizations in Los Angeles and beyond.
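As a rough illustration of the three-layer idea, the sketch below models a catalog, a metadata layer, and data files in plain Python. The class names, the `day` column, and the file paths are assumptions made for the example; real Iceberg metadata is considerably richer (manifest lists, partition specs, per-column statistics).

```python
from dataclasses import dataclass


@dataclass
class DataFile:
    path: str
    min_day: int  # lower bound of a hypothetical 'day' column in this file
    max_day: int  # upper bound of the same column


@dataclass
class Manifest:
    files: list[DataFile]


@dataclass
class Snapshot:
    snapshot_id: int
    manifests: list[Manifest]


@dataclass
class TableMetadata:
    snapshots: list[Snapshot]
    current_snapshot_id: int

    def current(self) -> Snapshot:
        return next(s for s in self.snapshots
                    if s.snapshot_id == self.current_snapshot_id)


# Catalog layer: table name -> current table metadata.
catalog: dict[str, TableMetadata] = {
    "sales": TableMetadata(
        snapshots=[Snapshot(1, [Manifest([
            DataFile("data/a.parquet", 1, 10),
            DataFile("data/b.parquet", 11, 20),
        ])])],
        current_snapshot_id=1,
    )
}


def plan_scan(table: str, day: int) -> list[str]:
    """Return only the data files whose value range could contain `day`."""
    snapshot = catalog[table].current()
    return [f.path
            for m in snapshot.manifests
            for f in m.files
            if f.min_day <= day <= f.max_day]
```

Here `plan_scan("sales", 15)` consults only metadata and returns a single candidate file, which is the kind of file skipping that helps query times.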

Embracing Apache Iceberg within lakehouse architectures is not just about keeping up with the data demands of today but also about future-proofing our data infrastructure for the challenges of tomorrow.

Embracing Schema Evolution with Iceberg for Data Consistency and Reliability

Schema evolution is a critical aspect of modern data management, ensuring data consistency and reliability as schemas change over time. Apache Iceberg’s approach to schema evolution allows businesses to adapt their data models without the risk of data corruption or loss.
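One reason schema changes are safe in Iceberg is that columns are tracked by a stable field ID rather than by name or position. The following is a minimal sketch of that idea in plain Python; the dictionaries and the `read_row` helper are hypothetical and only mimic the behavior, they are not Iceberg's reader.

```python
# Each schema version maps a stable field ID to the column's current name.
schema_v1 = {1: "id", 2: "name"}
# Version 2 renames field 2 and adds a new field 3.
schema_v2 = {1: "id", 2: "full_name", 3: "email"}

# A row from a data file written under v1, stored by field ID.
old_file_row = {1: 42, 2: "Ada"}


def read_row(stored: dict, schema: dict) -> dict:
    """Project a stored row onto a schema.

    Columns that did not exist when the file was written read as None.
    """
    return {name: stored.get(field_id) for field_id, name in schema.items()}


row = read_row(old_file_row, schema_v2)
# row == {"id": 42, "full_name": "Ada", "email": None}
```

The old file is never rewritten: the rename is resolved through the ID, and the added column simply reads as missing for older rows.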

The Dremio Blog provides insights into the utilization of Apache Iceberg on Databricks, emphasizing the ease of integration and configuration adjustments required to leverage Iceberg’s capabilities natively. “By adding the Iceberg jar and tweaking the appropriate Spark configurations, you can use Databricks Spark with Iceberg natively.”
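The “appropriate Spark configurations” typically look something like the following. This is a sketch under assumptions: the helper `iceberg_spark_conf`, the catalog name, and the warehouse path are placeholders chosen for illustration, and a Hadoop-type catalog is only one of several options. The exact settings for a given Databricks runtime and Iceberg version should be checked against the Iceberg documentation.

```python
def iceberg_spark_conf(catalog_name: str, warehouse: str) -> dict[str, str]:
    """Build Spark properties that register an Iceberg catalog.

    `catalog_name` and `warehouse` are placeholders supplied by the caller.
    """
    prefix = f"spark.sql.catalog.{catalog_name}"
    return {
        # Enables Iceberg's SQL extensions in Spark.
        "spark.sql.extensions":
            "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions",
        # Registers a catalog backed by Iceberg's SparkCatalog implementation.
        prefix: "org.apache.iceberg.spark.SparkCatalog",
        # A file-system ("hadoop") catalog; other catalog types exist.
        f"{prefix}.type": "hadoop",
        f"{prefix}.warehouse": warehouse,
    }


conf = iceberg_spark_conf("demo", "s3://example-bucket/warehouse")
```

Each key and value could then be entered in the cluster's Spark config or passed to `SparkSession.builder.config(key, value)`, with the matching Iceberg Spark runtime jar attached to the cluster.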

Embracing schema evolution with Iceberg is not just a technical necessity; it’s a strategic move that ensures data integrity and supports the dynamic nature of business requirements.

Strengthening Data Governance with Iceberg Catalogs in Lakehouse Environments

In the realm of data governance, Apache Iceberg catalogs serve as a cornerstone for establishing robust policies and procedures. The integration of Iceberg with MinIO, as detailed on the MinIO blog, illustrates the potential for building multi-cloud data lakes that adhere to strict governance standards. “It supports the lingua franca of data analysis, SQL, as well as key features like full schema evolution, hidden partitioning, time travel, and rollback and data compaction.”

With features like schema evolution and time travel, Iceberg catalogs enable organizations to maintain historical data accuracy and facilitate compliance with regulatory requirements.
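Time travel and rollback both follow from the fact that every commit produces a new snapshot and older snapshots are retained. The toy model below sketches that mechanism in plain Python; the `Table` and `Snapshot` classes, timestamps, and file names are hypothetical and not Iceberg's actual API.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    committed_at: int          # epoch seconds
    files: tuple[str, ...]     # data files visible in this snapshot


@dataclass
class Table:
    history: list[Snapshot] = field(default_factory=list)
    current_id: Optional[int] = None

    def commit(self, snapshot_id: int, committed_at: int, files: tuple[str, ...]) -> None:
        # Every write appends a snapshot; earlier ones stay in the history.
        self.history.append(Snapshot(snapshot_id, committed_at, files))
        self.current_id = snapshot_id

    def current(self) -> Snapshot:
        return next(s for s in self.history if s.snapshot_id == self.current_id)

    def as_of(self, timestamp: int) -> Snapshot:
        """Time travel: the latest snapshot committed at or before `timestamp`."""
        candidates = [s for s in self.history if s.committed_at <= timestamp]
        if not candidates:
            raise ValueError("no snapshot exists at or before that time")
        return max(candidates, key=lambda s: s.committed_at)

    def rollback_to(self, snapshot_id: int) -> None:
        """Rollback: point the table back at an earlier, retained snapshot."""
        if not any(s.snapshot_id == snapshot_id for s in self.history):
            raise ValueError("unknown snapshot")
        self.current_id = snapshot_id
```

For an auditor, `as_of` answers "what did this table contain at a given time?", while `rollback_to` undoes a bad write without deleting the record that it happened.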

Strengthening data governance is not merely about compliance; it’s about building trust in data assets and ensuring that they are leveraged responsibly and ethically for the benefit of all stakeholders.

Charting the Future: Trends and Innovations in Apache Iceberg Catalog Management

Looking ahead, the trends and innovations in Apache Iceberg catalog management promise to redefine the data landscape. As organizations in Los Angeles and around the world continue to generate and consume data at unprecedented rates, the need for advanced catalog management solutions becomes more critical.

Applied AI Lab’s YouTube tutorial, “Apache Iceberg Tutorial: Learn How to Use Catalogs for Data Management,” offers a glimpse into the practical applications of these tools. It also underscores the importance of staying abreast of the latest developments in the field.

As we chart the future of data management, it is clear that the innovations in Apache Iceberg catalog management will play a pivotal role in shaping the data-driven decision-making processes of tomorrow.

For organizations seeking to harness the full potential of Apache Iceberg and data lakehouse architecture, Bee Techy stands ready to assist. Our expertise in the latest data management technologies ensures that your business stays ahead of the curve. Contact us for a quote and let’s embark on a journey to transform your data ecosystem.