Exploring the Intersection of AI, Ethics, and Law: The ChatGPT Legal Saga

Uncovering the Cyber Intrigue: The NYT ChatGPT Lawsuit Unfolds

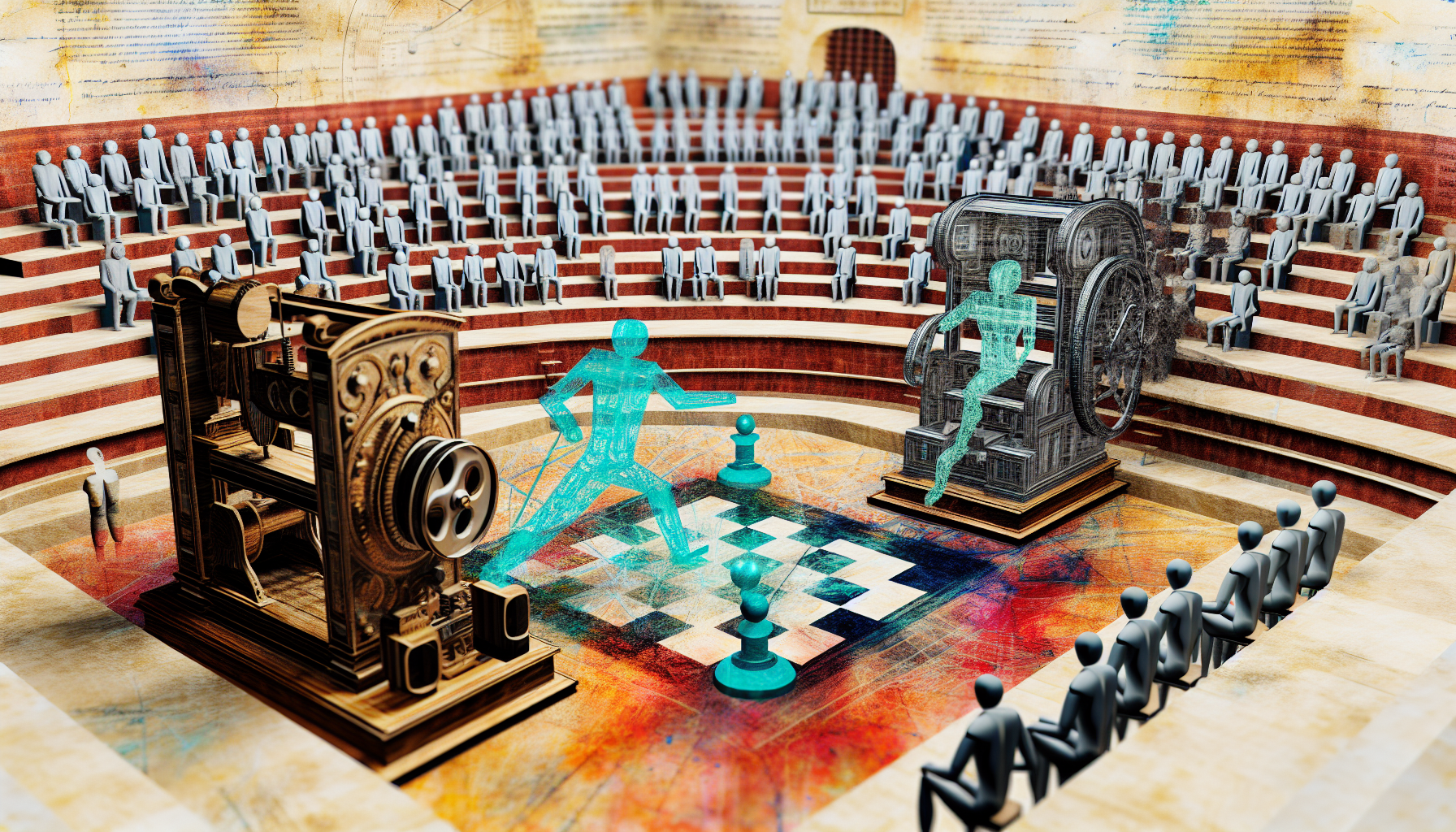

Recent developments in the AI industry have brought to light a contentious legal battle that has the tech community abuzz. In a court filing, OpenAI alleges that The New York Times ‘hacked’ ChatGPT to produce evidence for the newspaper’s copyright lawsuit against the company. The accusation has raised questions about the limits and liabilities of AI research and journalism.

According to OpenAI’s filing, the newspaper made tens of thousands of attempts to manipulate ChatGPT into producing the outputs it cites, and OpenAI has asked the court to dismiss some of the Times’ claims. As the case unfolds, it reveals the complexities of copyright law in the digital age and the role AI may play in reshaping these legal boundaries.

While the legal aspects are being debated in court, the ethical considerations of such actions cannot be overlooked. The idea of ‘hacking’ an AI for journalistic purposes walks a tightrope between investigative reporting and potential overreach.

Ethical Hacking AI: The Fine Line Between Research and Manipulation

The term ‘ethical hacking’ typically conjures images of cybersecurity experts testing systems to improve their defenses. However, when it comes to AI, the concept takes on a new dimension. Ethical hacking of AI systems, like ChatGPT, involves probing the technology to understand its responses, biases, and potential for misuse.

Yet when does this probing cross into manipulation? According to OpenAI, the actions of the New York Times ventured into this gray area. OpenAI’s accusation that the publication used prompt hacking to generate anomalous outputs for its lawsuit puts the spotlight on the delicate balance between legitimate research and the creation of misleading narratives.

As AI continues to evolve, establishing clear ethical guidelines for its interrogation will be crucial, not just for legal protection but also for maintaining public trust in the technology and the entities that wield it.

The OpenAI Litigation Case: ChatGPT Under Legal Scrutiny

The OpenAI litigation has brought ChatGPT squarely under the legal microscope. OpenAI’s defense points to the Times’ alleged use of ‘prompt hacking’ to generate outputs that resemble old articles, a practice the company argues is not indicative of the AI’s typical behavior.

This case is not just about one company versus another; it’s about setting precedents for AI’s role in content creation and copyright. The behaviors at issue, regurgitation of training data and model hallucination, are problems that OpenAI acknowledges and says it is actively working to address. But the legal implications of these issues are still largely uncharted territory.

With AI’s rapidly increasing capabilities, the outcomes of this case could have far-reaching consequences for creators and users of AI alike, particularly in the realm of intellectual property rights.

AI Legal Issues in Los Angeles: A New Frontier in Technology Law

In Los Angeles, a city known for its innovative tech scene, AI legal issues are becoming increasingly prominent. As AI technologies become more integrated into various industries, legal professionals are facing a new frontier that requires a deep understanding of both technology and law.

The ChatGPT lawsuit is a prime example of the kind of legal challenges that can arise. It’s not just about understanding the intricacies of AI behavior but also about interpreting how existing laws apply to novel situations created by AI systems. Los Angeles is poised to become a key battleground for these discussions, with its blend of tech companies, legal expertise, and creative industries.

For businesses and individuals alike, navigating this complex legal landscape will require the guidance of skilled professionals who are well-versed in both the technical and legal aspects of AI.

AI Journalistic Integrity: Balancing Investigation and Privacy in the Digital Age

Journalism has always played a critical role in bringing important issues to light, and AI has become a powerful tool in this endeavor. However, the ChatGPT lawsuit raises important questions about journalistic integrity when reporters investigate AI. How far is too far when it comes to probing AI systems, and where do we draw the line to ensure privacy and respect for intellectual property?

The potential for AI to infringe on individual privacy or to inadvertently disclose sensitive information is a concern that journalists must navigate carefully. The ethics of AI interrogation in journalism involve a delicate balance between the public’s right to know and the individual’s right to privacy.

As we continue to grapple with these issues, it becomes clear that the responsible use of AI in journalism will be a defining factor in maintaining the trust and credibility of the press in the digital age.

For those intrigued by the evolving landscape of AI, ethics, and law, Bee Techy offers expert software development and consulting services. We understand the complexities of these issues and are here to help businesses navigate the legal and ethical challenges of AI technology.

Interested in discussing how we can assist with your AI projects or legal concerns? Visit us at https://beetechy.com/get-quote to contact us for a quote.