From Black Box to Boardroom: How SHAP Empowers Transparent Decision-Making in Custom Software Solutions

In this guide, we’ll explore how SHAP (SHapley Additive exPlanations) is revolutionizing custom software solutions by making AI models transparent and interpretable, empowering business leaders to make informed decisions with confidence. In an era where data-driven decisions can make or break a business, transparency in software solutions is no longer a luxury; it’s a necessity.

Understanding SHAP: The Basics

What is SHAP?

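SHAP, or SHapley Additive exPlanations, is a method for interpreting complex machine learning models by assigning each feature a contribution value for a particular prediction. It is rooted in Shapley values from cooperative game theory: the prediction is treated as a payout shared among the features, and the contributions add up to the difference between the model’s output for that input and its baseline (average) output. Because this gives one consistent measure of feature importance across model types, SHAP is a powerful tool for machine learning transparency.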
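To make the game-theory idea concrete, here is a minimal from-scratch sketch in Python (not the `shap` library’s API) that computes exact Shapley values for one prediction. For each feature it enumerates every subset S of the other features and weights the feature’s marginal contribution by |S|!(M-|S|-1)!/M!, where M is the number of features. It assumes that a feature "absent" from a subset takes a single baseline value; real SHAP explainers typically average over a background dataset instead. The toy scoring model and its feature names are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for a single prediction.

    Assumption: a feature outside a coalition takes its value from `baseline`.
    The number of subsets visited grows exponentially with the number of
    features, so this is only practical for a handful of them.
    """
    n = len(x)

    def value(coalition):
        # Model output when only the features in `coalition` come from x.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # subset sizes 0 .. n-1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Weighted marginal contribution of feature i to this subset.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical toy scoring model: income, debt, years_at_job,
# including an income x years interaction term.
def predict(z):
    income, debt, years = z
    return 2.0 * income - 3.0 * debt + 0.5 * income * years


phi = shapley_values(predict, x=[4.0, 1.0, 2.0], baseline=[0.0, 0.0, 0.0])
print([round(v, 6) for v in phi])  # prints [10.0, -3.0, 2.0]
```

The three contributions sum to 9.0, which is the model’s output for this input minus its output at the baseline. The interaction between income and years at job is split equally between those two features.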

Why Transparency Matters in AI

Transparency in AI models is crucial, especially in custom software solutions for businesses. According to Premai Blog, enterprises, particularly in regulated industries like finance and healthcare, must ensure AI-driven decisions are clear and accountable. This transparency helps businesses comply with regulations and build stakeholder trust.

The Role of SHAP in Custom Software Development

Enhancing Model Interpretability

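SHAP enhances model interpretability by breaking each prediction into per-feature contributions, for example showing that an applicant’s income raised a credit score while existing debt lowered it. This makes complex models understandable for non-technical stakeholders, which is particularly valuable in custom software development projects where decision-makers need to grasp AI outputs without delving into technical details.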
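The final step, turning contributions into language a stakeholder can read, can be sketched as a small formatting function. This is a hypothetical helper, not part of any SHAP library; it assumes the per-feature contributions and the baseline prediction have already been computed, and the feature names and "score" wording are illustrative.

```python
def explain_in_words(feature_names, contributions, base_value, top_k=2):
    """Summarize the largest per-feature contributions in plain language.

    Hypothetical helper: expects contributions already computed by a SHAP-style
    method, so that base_value + sum(contributions) equals the prediction.
    """
    prediction = base_value + sum(contributions)
    # Rank features by the size of their effect, regardless of direction.
    ranked = sorted(zip(feature_names, contributions),
                    key=lambda pair: abs(pair[1]), reverse=True)
    lines = [f"Predicted score: {prediction:.1f} (baseline: {base_value:.1f})."]
    for name, value in ranked[:top_k]:
        if value == 0:
            continue  # a zero contribution has nothing to report
        direction = "raised" if value > 0 else "lowered"
        lines.append(f"- {name} {direction} the score by {abs(value):.1f} points.")
    return "\n".join(lines)


print(explain_in_words(["income", "debt", "years_at_job"],
                       [10.0, -3.0, 2.0], base_value=0.0))
```

For the sample input this prints the predicted score of 9.0 followed by two lines: income raised the score by 10.0 points, and debt lowered it by 3.0 points. Limiting the summary to the top few features keeps it readable for non-technical audiences.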

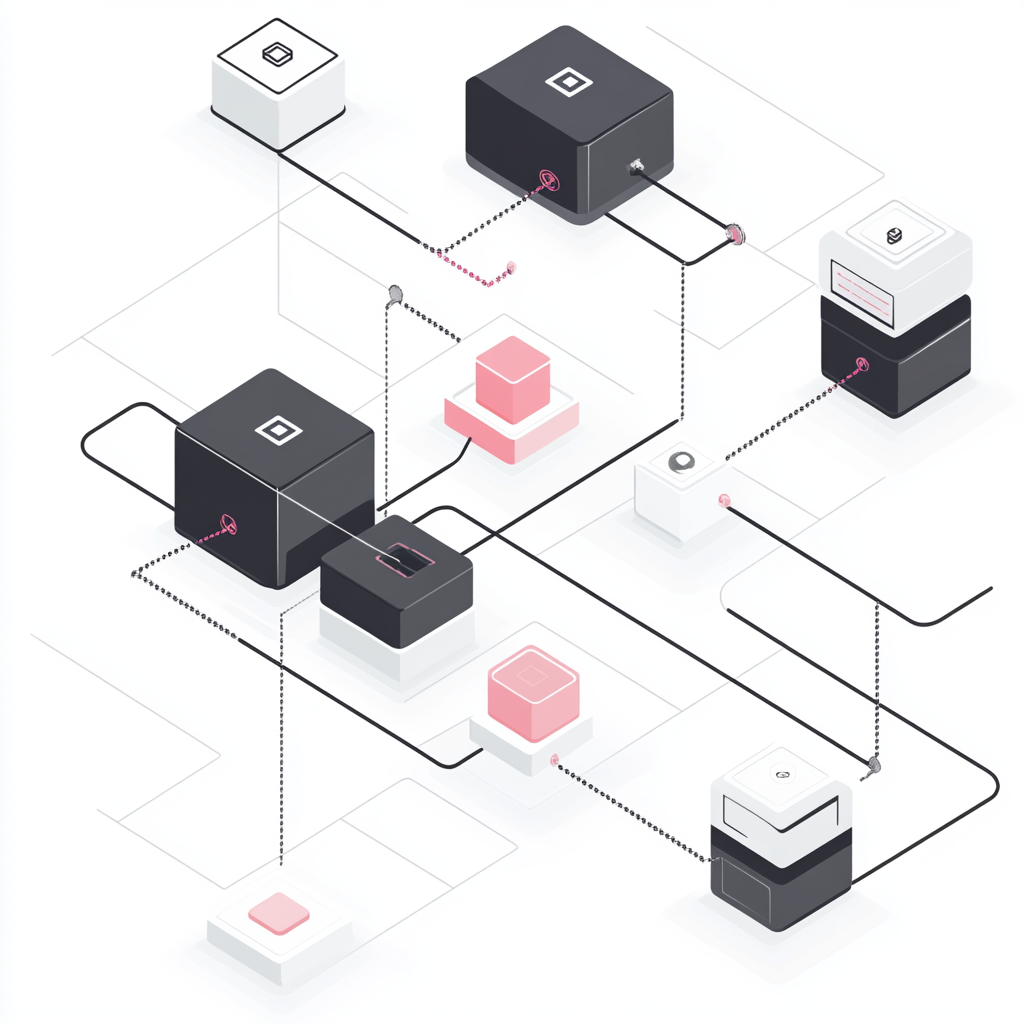

Integrating SHAP into Existing Systems

Integrating SHAP into existing software systems requires evaluating the current infrastructure and deciding how explanations will fit in: which explainer suits the model type, whether explanations are computed in batch or alongside each prediction, how much compute that adds, and where the results are stored and shown to users.
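One common integration pattern is to wrap the existing prediction function so that every response carries its explanation. The sketch below is a hypothetical wrapper; the class, field, and feature names are illustrative and not taken from any library. The explainer is injected as a plain function, an additivity check rejects explanations that do not sum to the prediction minus the baseline, and a small in-memory cache avoids recomputing explanations for repeated inputs.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Sequence


@dataclass
class ExplainedModel:
    """Hypothetical wrapper that returns a prediction together with its explanation."""
    predict: Callable[[Sequence[float]], float]
    explain: Callable[[Sequence[float]], List[float]]  # per-feature contributions
    base_value: float                                  # model output at the baseline
    feature_names: List[str]
    tolerance: float = 1e-6
    _cache: Dict[tuple, dict] = field(default_factory=dict, init=False, repr=False)

    def __call__(self, x: Sequence[float]) -> dict:
        key = tuple(x)
        if key in self._cache:  # explanations can be expensive; reuse them
            return self._cache[key]
        prediction = self.predict(x)
        contributions = self.explain(x)
        # SHAP-style contributions must add up to prediction - base_value.
        gap = prediction - self.base_value - sum(contributions)
        if abs(gap) > self.tolerance:
            raise ValueError(f"explanation does not add up (gap={gap:.3g})")
        response = {
            "prediction": prediction,
            "base_value": self.base_value,
            "contributions": dict(zip(self.feature_names, contributions)),
        }
        self._cache[key] = response
        return response


# Usage with a hypothetical additive model: relative to a baseline, each
# feature's exact Shapley value is simply its own term's change.
baseline = [1.0, 0.5]


def predict(z):
    return 2.0 * z[0] - 3.0 * z[1] ** 2


def explain(x):
    return [2.0 * (x[0] - baseline[0]), -3.0 * (x[1] ** 2 - baseline[1] ** 2)]


model = ExplainedModel(predict, explain, base_value=predict(baseline),
                       feature_names=["income", "debt"])
print(model([3.0, 1.0]))
```

Injecting the explainer keeps the wrapper independent of the model type: for a handful of features `explain` could be an exact enumeration, and for more features a sampling-based estimate.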

Benefits of SHAP for Business Decision-Makers

Improved Decision-Making

By providing clear explanations of AI predictions, SHAP’s insights can lead to better business strategies and outcomes. Executives can see why a model made a recommendation before acting on it, which supports more informed decisions.

Risk Management

Using SHAP, businesses can identify and mitigate potential risks in decision-making processes, for example by spotting predictions that are driven by an unexpected or inappropriate feature. This capability is crucial for industries like finance, where, as noted by Healthcare Finance News, AI is used to automate risk assessments and improve accuracy.

Challenges and Considerations

Technical Challenges

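Implementing SHAP can present technical challenges, such as ensuring compatibility with existing systems and managing computational costs. Exact Shapley values require evaluating the model over every subset of features, a number that doubles with each added feature, so practical systems rely on approximations such as sampling-based estimates, or on algorithms specialized for particular model types such as tree ensembles. With strategic planning and expert guidance, these challenges can be overcome.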
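A minimal sketch of the sampling idea, written from scratch in Python rather than taken from the `shap` library, follows. Instead of visiting all 2^n feature subsets (already 1,048,576 for 20 features), it averages each feature’s marginal contribution over randomly shuffled feature orderings, at a cost of n + 1 model calls per ordering. It assumes that absent features take a single baseline value; the function name, parameters, and toy model are illustrative.

```python
import random


def sampled_shapley(predict, x, baseline, n_permutations=200, seed=0):
    """Monte Carlo estimate of Shapley values via random feature orderings.

    Assumption: a feature not yet added takes its value from `baseline`.
    Cost: n_permutations * (n + 1) model calls.
    """
    rng = random.Random(seed)
    n = len(x)
    phi = [0.0] * n
    order = list(range(n))
    for _ in range(n_permutations):
        rng.shuffle(order)
        z = list(baseline)  # start with every feature "absent"
        previous = predict(z)
        for i in order:  # add features one at a time in this random order
            z[i] = x[i]
            current = predict(z)
            phi[i] += current - previous  # marginal contribution of feature i
            previous = current
    return [total / n_permutations for total in phi]


# Hypothetical toy model with an income x years interaction.
def predict(z):
    income, debt, years = z
    return 2.0 * income - 3.0 * debt + 0.5 * income * years


estimate = sampled_shapley(predict, [4.0, 1.0, 2.0], [0.0, 0.0, 0.0],
                           n_permutations=2000)
print([round(v, 2) for v in estimate])
```

Within each ordering the marginal contributions telescope to exactly f(x) minus f(baseline), so the estimates always add up correctly. Only the split between interacting features (here income and years at job) carries sampling error, and that error shrinks as `n_permutations` grows.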

Cost-Benefit Analysis

Evaluating the investment in SHAP against potential returns is essential for businesses. The initial costs may be significant, but the long-term benefits of improved transparency and decision-making can justify the investment.

Expert Insights

According to Dr. John Smith, a leading data scientist, “SHAP provides a level of transparency that is crucial for gaining stakeholder trust in AI-driven decisions.” This sentiment is echoed by Jane Roe, a software developer, who states, “Integrating SHAP into our systems has significantly improved our ability to explain AI outputs to clients.”

Case Study: SHAP Implementation in a Los Angeles Tech Firm

A Los Angeles-based tech company successfully integrated SHAP into its software solutions, overcoming initial challenges related to system compatibility and computational demands. The outcome was a more transparent decision-making process, leading to enhanced client satisfaction and business growth.

FAQs

- What is SHAP and how does it work?

- SHAP, or SHapley Additive exPlanations, is a method used to interpret complex machine learning models by assigning each feature an importance value for a particular prediction.

- Why should businesses in Los Angeles consider SHAP for their software solutions?

- Businesses can benefit from SHAP’s ability to provide transparency, leading to more informed decision-making and competitive advantage in the market.

- Is SHAP compatible with all types of machine learning models?

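- SHAP is versatile and can be applied to a wide range of models. Model-agnostic methods such as Kernel SHAP need only the model’s prediction function, while faster, model-specific algorithms exist for tree ensembles, linear models, and deep learning models, so the implementation varies with the model type and complexity.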
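To illustrate why implementations differ by model type, here is a hedged sketch of the kind of shortcut that model-specific explainers rely on. Take a linear model f(z) = intercept + sum of w_i * z_i, treat features as independent, and average "absent" features over a background dataset. Each Shapley value then reduces to w_i * (x_i - mean_i), with no subset enumeration at all. The function name, weights, and background rows are hypothetical.

```python
def linear_shap(weights, x, background):
    """Shapley values for a linear model, assuming independent features.

    Absent features are replaced by their mean over `background`, so feature i
    always adds w_i * (x_i - mean_i) no matter which other features are present.
    """
    n = len(weights)
    means = [sum(row[i] for row in background) / len(background) for i in range(n)]
    return [w * (xi - m) for w, xi, m in zip(weights, x, means)]


# Hypothetical model and reference data.
weights, intercept = [2.0, -3.0], 1.0
background = [[1.0, 0.0], [3.0, 1.0]]


def predict(z):
    return intercept + sum(w * v for w, v in zip(weights, z))


x = [4.0, 1.0]
phi = linear_shap(weights, x, background)
print(phi)  # prints [4.0, -1.5]
# Additivity: contributions sum to f(x) minus the average background prediction.
average = sum(predict(row) for row in background) / len(background)
print(predict(x) - average)  # prints 2.5
```

An exact model-agnostic computation with the same background data would reach the same values, but only by evaluating the model on many feature subsets. The linear shortcut needs one multiplication per feature.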

Actionable Takeaways

- Evaluate the transparency needs of your current software solutions.

- Consider integrating SHAP for improved model interpretability.

- Engage with technical experts to assess the feasibility of SHAP in your systems.

Strategic Conclusion

SHAP is a game-changer for businesses seeking transparency in their software solutions, enabling better decision-making and risk management. Contact us today to learn how SHAP can transform your business’s software solutions and decision-making processes.